
Suggested Citation:"3 Tools for Virtual Design and Manufacturing." National Research Council. 2004. Retooling Manufacturing: Bridging Design, Materials, and Production. Washington, DC: The National Academies Press. doi: 10.17226/11049.


3

Tools for Virtual Design and Manufacturing

Five technical domains have been identified in which virtual design and manufacturing tools exist or where important areas of knowledge and practice are supported by information technology: systems engineering, engineering design, materials science, manufacturing, and life-cycle assessment. However, progress is needed in order to more fully take advantage of these models, simulations, databases, and systematic methods. Each of the domains is largely independent of the others, although links are being made, bridges are being built, and practitioners and researchers in each domain recognize the value of knowledge in some of the other domains. Intercommunication and interoperability are two prerequisites for serious progress. Formidable technical and nontechnical barriers exist, and the committee offers recommendations in each domain.

TOOL EVOLUTION AND COMPATIBILITY

Throughout human history tools have evolved, typically driven by technological availability, market dynamics, and fundamental need. In agriculture, teams of oxen have been replaced by sophisticated tractors with specialized attachments. Computing tools have morphed from fingers and toes to abacuses to slide rules to calculators to high-performance computers. The software used within these computing systems has evolved in terms of programming levels of abstraction and overall functionality. Software not only is written as an end item that operates within a product, but now also gets developed as models and simulations to emulate the end item itself in order to perfect its eventual production, field use, and retirement. Software-based tools are developed to create and use these models and simulations to best perform design, engineering analyses, and manufacturing. Table 3-1 lists examples of available tools and the areas in which they operate.

Advanced engineering environments (AEEs) are integrated computational systems and tools that facilitate design and production activities within and across organizations. An AEE may include the following elements:

- Design tools such as computer-aided design (CAD), computer-aided engineering (CAE), and simulation
- Production tools such as computer-aided manufacturing (CAM), manufacturing execution system, and workflow simulation


TABLE 3-1 Representative Tools Used in the Industry

System Life Cycle

Engineering/Technical Cost Analysis

Systems Engineering

| Activity | Marketing | Product Engineering | Industrial Engineering | Marketing | ||||

| Function Action | Mission, or Customer Needs Requirements Analysis | Product Planning Functional Analysis | Product Architecture Synthesis | Engineering Design Analysis, Visualization, and Simulation | Manufacturing Engineering Analysis and Visualization | Manufacturing Operations Production and Assembly | Field Operations Use, Support, and Disposal | |

| Business Case | Forecasting | @Risk, Crystal Ball, Excel, i2, Innovation Management, JD Edwards, Manugistics, Oracle, PeopleSoft, QFD/Capture, RDD-SD, SAP, Siebel | Arena PLM, Eclipse CRM, Innovation Management, MySAP PLM, RDD-SD, specDEV, TRIZ | Arena PLM, Innovation Management, MySAP PLM, RDD-IDTC, specDEV, TRIZ | Arena PLM, Innovation Management, MySAP PLM, RDD-IDTC, specDEV, TRIZ | Arena PLM, Innovation Management, MySAP PLM, specDEV, TRIZ | Arena PLM, MySAP PLM, specDEV, TRIZ | Innovation Management |

| Product Life-Cycle Planning and Management | Innovation Management, QFD/Capture, RDD-RM | Geac, I-Logix, Innovation Management, Invensys, JD Edwards, Oracle, PeopleSoft, RDD-SA, SAP, Windchill | Innovation Management, RDD-SD | Functional Prototyping, RDD-SD | Functional Prototyping | Innovation Management, RDD-SD | ||

| Resource Planning | Project, RDD-DVF, RDD-SD, TaskFlow Management | Innovation Management, RDD-DVF, RDD-SD | DSM, Geac, Invensys, JD Edwards, Oracle, PeopleSoft, RDD-SD, SAP, TaskFlow Management | DSM, Project, RDD-SD, TaskFlow Management | TaskFlow Management, HMS-CAPP | TaskFlow Management | TaskFlow Management | |

| Computer-aided engineering | Modeling | Caliber, DOORS, RDD-SD, RDD-OM, Innovation Management, Statemate | Caliber, DOORS, Innovation Management, RDD-OM, Statemate | ADAMS, Caliber, DADS, DOORS, Dynasty, EASA, Engineous, Innovation Management, LMS, MatLab, MSC, Opnet, Phoenix, RDD-OM, RDD-SD, Statemate, VL | Abaqus, AML, Ansys, AutoCAD, AVL, Caliber, CATIA, DOORS, EASA, EDS, Engineous, Fluent, Functional Prototyping, IDEAS, MSC, Opnet, Phoenix, ProE, RDD-SD, StarCD, Statemate, Unigraphics, Working Model | Caliber, DFMA, DOORS, Functional Prototyping | Caliber, DOORS | Caliber, DOORS, Innovation Management |



| Computer-aided engineering | Simulation | Caliber, DOORS, Innovation Management, RDD-DVF, Statemate | Caliber, DOORS, Innovation Management, RDD-DVF, Statemate, Working Model | Caliber, DOORS, CATIA, Delmia V5, Enovia V5, RDD-SD, Innovation Management, Statemate | Abaqus, AML, ANSoft, Ansys, Caliber, DICTRA, DOORS, DYNA3D, EASA, EDS, Engineous, Functional Prototyping, ICEM CFD, LMS, ModelCenter, MSC, NASTRAN, Phoenix, RDD-SD, Statemate, Stella/Ithink | Caliber, DOORS, Functional Prototyping, HMS-CAPP | Caliber, DOORS | Caliber, DOORS, Innovation Management |

| Visualization | Innovation Management, RDD-OM, Statemate | Innovation Management, RDD-OM, RDD-SD, Statemate | CATIA, Delmia V5, EDS, Enovia V5, Innovation Management, Jack, RDD-SA, Slate, Statemate | Abaqus, ACIS, Amira, Ansys, EDS, EnSight, Fakespace, Functional Prototyping, Ilogix, Jack, MatLab, Open-DX, RDD-SD, Rhino, SABRE, Simulink, Slate, Statemate, VisMockup | Functional Prototyping, Statemate | Innovation Management | ||

| Computer-aided manufacturing | Product Data Management | Innovation Management | Innovation Management | CATIA, Delmia V5, Enovia V5 | CATIA, Dassault, Delmia V5, EDS, Enovia V5, Metaphase, PTC, Windchill | Innovation Management | ||

| Electronic Design Automation | Caliber, DOORS | Caliber, DOORS, MatLab | Caliber, DOORS, Integrated Analysis, Simulator, Verilog-XL | Cadence, Caliber, Dassault, DOORS, Integrated Analysis, Mentor Graphics, PTC, System Vision | Caliber, DOORS | Caliber, DOORS, PADS | Caliber, DOORS, Integrated Analysis | |

| Manufacturing System Design | Functional Prototyping, RDD-ITDC, RDD-SD | Innovation Management, RDD-ITDC, RDD-SD | Integrated Data Sources, RDD-ITDC, RDD-SD | CimStation, Envision/Igrip, Integrated Data Sources, RDD-ITDC, RDD-SD | CIM Bridge, EDS, Tecnomatix | Functional Prototyping | ||


| Computer-aided manufacturing | Manufacturing System Modeling | Functional Prototyping, RDD-ITDC, RDD-SD | Functional Prototyping, RDD-ITDC, RDD-SD | DICTRA, Functional Prototyping, Pandat, RDD-ITDC, RDD-SD, Thermo-Calc | Abinitio, CimStation, Dante, DEFORM, Envision/Igrip, Functional Prototyping, MAGMA, ProCast, RDD-ITDC, RDD-SD, SysWeld | Abinitio, Arena, Dante, DEFORM, Extend, Functional Prototyping, MAGMA, Pro/Model, ProCast, Simul8, SysWeld, TaylorED, Witness | Abinitio, Dante, DEFORM, Functional Prototyping, MAGMA, ProCast, SysWeld | Functional Prototyping |

| Manufacturing System Simulation | Functional Prototyping | Caliber, DOORS, Functional Prototyping | Abinitio, Caliber, CimStation, Dante, DEFORM, DOORS, Envision/Igrip, MAGMA, ProCast, SysWeld | Abinitio, Arena, Caliber, Dante, DEFORM, DOORS, Extend, MAGMA, Pro/Model, ProCast, Simul8, SysWeld, TaylorED, Witness | Abinitio, Dante, DEFORM, MAGMA, ProCast, SysWeld | |||

| Manufacturing System Visualization | Functional Prototyping | Functional Prototyping | Functional Prototyping | CimStation, Envision/Igrip, Functional Prototyping | Arena, Extend, Functional Prototyping, Pro/Model, Simul8, Taylor ED, Witness | Abinitio, Functional Prototyping, MAGMA, ProCast, SysWeld | Functional Prototyping | |

| Reliability Models | RDD-ITDC, RDD-SD | Functional Prototyping, RDD-ITDC, RDD-SD | DEFORM, DisCom2, Functional Prototyping, RDD-ITDC, RDD-SD | CASRE, Functional Prototyping, RDD-ITDC, RDD-SD | JMP, Minitab, SAS, WinSMITH | RDD-ITDC, RDD-SD | ||

| Logistics | Eclipse ERP, Integrated Analysis, RDD-ITDC, RDD-SD | Integrated Analysis, RDD-ITDC, RDD-SD | RDD-ITDC, RDD-SD | Integrated Analysis, RDD-ITDC, RDD-SD | Integrated Analysis | JD Edwards, Logistics, Manugistics | Integrated Analysis, RDD-ITDC, RDD-SD | |

| Purchasing | Purchasing plus | I2, Invensys, JD Edwards, Oracle, PeopleSoft, PTC, SAP | ||||||

| Supervisory Control | QUEST | Invensys, Siemens | ||||||

| Machine Control | Virtual NC | Labview, MATLAB, Unigraphics |


- Program management tools such as configuration management, risk management, and cost and schedule control
- Data repositories storing integrated data sets
- Communications networks giving participants inside and outside the organization secure access to data

As shown in Table 3-1, most of these tools exist today, but an AEE is more than just a collection of independent tools. Tools must be integrated to provide interoperability and data fusion. Organizational and interorganizational structures must be configured to reward their use, and workforce skills must be enhanced to make effective use of their capabilities.1

The Carnegie Mellon University Software Engineering Institute (SEI) studied the use of AEEs and concluded that they exist within a broad domain, across all aspects of an organization. AEEs provide comprehensive coverage of and substantial benefits to design and manufacturing activities:

-

Office applications such as word processing, spreadsheets, and e-mail, are already familiar to nearly everyone.

-

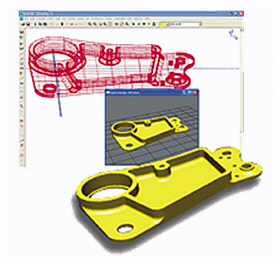

Computer-aided design and integrated solid modeling not only improve the quality of the engineering product but also provide the basis for the exchange of product data between manufacturers, customers, and suppliers.

-

Computer-aided engineering enables prediction of product performance prior to production, providing the opportunity for design optimization, reducing the risk of performance shortfalls, and building customer confidence.

-

Manufacturing execution systems provide agile, real-time production control and enable timely and accurate status reporting to customers.

-

Electronic data interchange provides up-to-date communication of business and technical data among manufacturers, customers, and suppliers.

-

Information security overlays all operations to keep data safe.

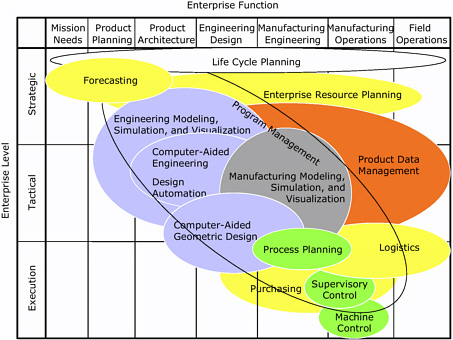

Figure 3-1 is a modification of an SEI chart presented to the committee that helps show the widespread and pervasive use of software that bridges many functions and levels throughout the design and manufacturing enterprise. Enterprise viewpoints concentrate on near-term, mid-term, and far-term perspectives in the context of factory floor execution, tactical analysis, and strategic thinking, respectively.

A product's evolution typically is split into many phases to show its various stages, and most tools can be categorized in terms of the temporal nature of their use. In this case, the committee has elected to view a product's life cycle as shown here in seven stages, from mission needs to field operations. Figure 3-1 shows that there is little overlap between manufacturing modeling and simulation tools, or manufacturing process planning, and engineering design tools, reflecting the lack of interoperability between these steps with currently available software.

Many vendors now sell tools that are beginning to offer intriguing solutions for overlapping key functions. Table 3-1 shows representative examples of some of these tools now being used in industry.2 For example, to address CAD-CAE interoperability, process integration and design optimization software tools that bundle discrete tools in order to facilitate multiprocess optimization are being introduced. Examples of such software are Synaps/Epogy, Isight, and Heeds from Red Cedar Technology. While these software packages look attractive in principle, human input remains essential to bridge the gaps between the various analytical tools.

| 1 | National Research Council, Advanced Engineering Environments: Achieving the Vision, Phase 1, National Academy Press, Washington, D.C., 1999. |

| 2 | In addition, Appendix C describes some of the current engineering design tools and Appendix D provides a list of representative vendors of computer-based tools used for design and other functions. |

FIGURE 3-1 Overlay of tools that bridge design and manufacturing. Each ellipse within the chart represents a different tool category. Ellipse size connotes the comprehensiveness of the capabilities of those tools within the matrix, and color shading (or lack thereof) highlights the focus of the various tools' strengths in design, manufacturing, business operations, or management. Blue shades indicate a concentration in design, while green trends into manufacturing. Yellow hues show a proclivity toward business operations. Orange indicates the prominence and importance of data management. Ellipses void of color detail project management functions. Source: Special permission to reproduce figure from "Advanced Engineering Environments for Small Manufacturing Enterprises," © 2003 by Carnegie Mellon University, is granted by the Software Engineering Institute.

This chapter covers in depth the state of affairs within each of five different tool categories:

- The section titled "Systems Engineering Tools" explains how philosophies are expanding from narrow discrete-element minimization to design-trade-space optimization strategies. While many tools exist within their own specialized fields, it recommends supervisory control and common links between individual routines.
- "Engineering Design Tools" discusses the current capabilities of engineering design methods and software and their general lack of interoperability. It makes recommendations to improve communication between design and manufacturing software so that engineering models can be exchanged and simulated in multiple environments.
- "Materials Science Tools" describes how properties of materials limit the design process and recommends improved physical models and property databases to support virtual design and manufacturing.
- "Manufacturing Tools" portrays advances in software ranging from detailed process planning and simulation models through production and enterprise management systems, focusing explicitly on issues related to the scope and scale of tools to design for X (DfX), where X is a variety of manufacturing parameters. It recommends organizational and algorithmic approaches for addressing obstacles.
- "Life-Cycle Assessment Tools" describes tools that measure the total environmental impact of manufacturing systems, from the extraction of raw materials to the disposal of products, and that evaluate product and process design options for reducing that impact.

SYSTEMS ENGINEERING TOOLS

The phrase, topic, and discipline "systems engineering" in the context of industrial manufacturing has evolved over the last several decades such that it now includes more topics and encompasses a far greater portion of the product life cycle than Henry Ford probably could have envisioned in 1914. By 1980, systems engineering thinking in this context was expanding; but it was still essentially limited to the industrial engineering skill of maximizing production to minimize cost by minimizing the time required to perform each individual manufacturing step or assembly action. The underlying assumption was that minimizing the time required for each discrete event would also minimize the total cost to manufacture an item. As such, the concepts were not applicable until production commenced and then they were only applied to minimize the cost after the product was designed and the manufacturing process or assembly line was defined. Systems engineering and the discrete event minimization strategy in the early 1900s could not have predicted Henry Ford's departure from a traditional batch assembly philosophy to the assembly line concept. Even though the resultant unit cost was dramatically reduced, the significant increases in time to first article, cost to design, and cost of construction were seen as insurmountable barriers. The assembly line was an unpredictable revolutionary change from the evolutionary manufacturing improvements associated with discrete event minimization.

During the last two decades, systems engineering has evolved to include the cost of automated machine tools as alternatives to labor and has developed several very different cost profiles; but the optimizations were still being performed at the simple part or discrete work element level. And the evaluations were being conducted on an essentially static, or already designed and about to be built, factory. While computers had become readily available in the 1980s, there were no fundamental changes in the process of minimizing the discrete events to minimize the total cost. The computers only crunched more numbers. Today's hardware and software are capable of simulating multiple, if not essentially unlimited, factory designs and equipment variations, giving the systems engineer the ability to affect both prior to a factory's construction.

When the full costs of labor, shipping, and work in process are included in the evaluations, the systems engineer can also affect the manufacturing site selection. But the same discrete-element minimization mentality remains. Current thinking and research in systems engineering are beginning to expand the scope from focusing on discrete work elements to analyzing entire operations, lines, factories, or enterprises to optimize the total cost of a given design or set of designs. With the continuously increased speed and lowered cost of computing, this is generally possible. But the task is being performed by brute-force methodology whereby all known permutations and combinations of discrete events are tried and all but the best are excluded.
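The brute-force approach described above can be sketched in a few lines. This is only an illustration under stated assumptions: the step names, equipment options, per-unit costs, throughputs, batch size, and work-in-process rate are all hypothetical and do not come from the committee's material.

```python
from itertools import product

# Hypothetical equipment options per manufacturing step:
# (name, processing cost per unit in dollars, throughput in units per hour)
OPTIONS = {
    "cut": [("manual saw", 4.0, 30), ("laser", 6.5, 90)],
    "weld": [("manual weld", 9.0, 20), ("robot weld", 12.0, 60)],
    "assemble": [("bench", 5.0, 40), ("line", 9.0, 80)],
}
BATCH_SIZE = 240            # units to produce (assumed)
WIP_COST_PER_HOUR = 400.0   # assumed carrying cost while the batch is in process


def total_cost(choice):
    """Total batch cost: per-unit processing plus time-dependent WIP cost."""
    processing = sum(cost for _, cost, _ in choice) * BATCH_SIZE
    bottleneck = min(rate for _, _, rate in choice)  # slowest step paces the line
    return processing + (BATCH_SIZE / bottleneck) * WIP_COST_PER_HOUR


def brute_force(options):
    """Try every combination of step options and keep only the cheapest."""
    steps = list(options)
    best_cost, best_choice = None, None
    for choice in product(*(options[s] for s in steps)):
        cost = total_cost(choice)
        if best_cost is None or cost < best_cost:
            best_cost = cost
            best_choice = {s: name for s, (name, _, _) in zip(steps, choice)}
    return best_cost, best_choice


def cheapest_per_step(options):
    """Discrete-element view: pick the lowest processing cost at each step."""
    return tuple(min(opts, key=lambda o: o[1]) for opts in options.values())
```

With these made-up numbers, the exhaustive search selects the laser, the robot weld, and the bench at a total of 8,040, whereas picking the cheapest option at each step individually yields 9,120. The number of combinations is the product of the option counts per step, so the search grows quickly as steps and options are added.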


FIGURE 3-2 Expanded systems engineering phases. Source: B.S. Blanchard and W.F. Fabrycky, Systems Engineering and Analysis, 3rd Edition, © 1998. Reprinted by permission of Pearson Education Inc., Upper Saddle River, N.J.

During the last 10 years, systems engineering has matured to the point that it is not an uncommon degree program in universities. Industry and defense both utilize the discipline, and there is a globally recognized organization that represents the practitioners. The International Council on Systems Engineering (INCOSE) defines the subject as "… an interdisciplinary approach and means to enable the realization of successful systems." Further, INCOSE lists seven functional areas included in systems engineering (operations, performance, testing, manufacturing, cost and scheduling, training and support, and disposal).3

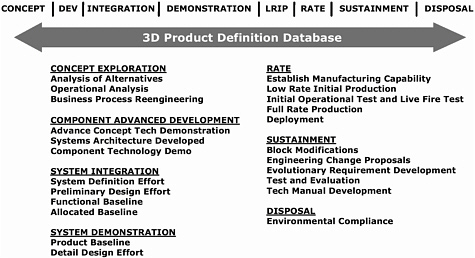

Blanchard and Fabrycky bring many of the systems engineering concepts and phases together in their book as shown in Figure 3-2. Other authors have described systems engineering as having four (Figure 3-3), seven (Figure 3-1), and even eight (Figure 3-4) phases. The important factors to observe from all this are that systems engineering can include everything from determination of the need for a product to its disposal, and that there are significant overlapping phases (notably design and manufacturing) that require interconnections and the sharing of data and information.

Engineering Cost Analysis

The next logical advance is what is referred to as an engineering or technical cost analysis. In its simplest form, it may be no more than a spreadsheet listing the phases found in the product concept through product realization cycles on one axis and identifying the many functional areas, costs, or even software tools on the other. This committee elected to settle on seven phases and to portray the traditional flow of effort (time) from left to right, as shown in Table 3-1: mission or customer needs; product planning; product architecture; engineering design; manufacturing engineering; manufacturing operations; and field operations.
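The simplest spreadsheet form of such an analysis might look like the sketch below, with the seven life-cycle phases of Table 3-1 on one axis and cost categories on the other. The category names and every cost figure are hypothetical placeholders chosen only to show the layout.

```python
# Seven life-cycle phases, in the left-to-right order of Table 3-1
PHASES = [
    "mission or customer needs",
    "product planning",
    "product architecture",
    "engineering design",
    "manufacturing engineering",
    "manufacturing operations",
    "field operations",
]

# Hypothetical cost categories and values (thousands of dollars), one per phase
COSTS = {
    "labor":          [40, 60, 90, 300, 200, 800, 350],
    "materials":      [0, 0, 5, 40, 120, 1500, 200],
    "software tools": [10, 15, 30, 120, 80, 50, 20],
}


def phase_totals(costs):
    """Column sums: total cost incurred in each phase."""
    return [sum(row[i] for row in costs.values()) for i in range(len(PHASES))]


def category_totals(costs):
    """Row sums: total cost per category across the whole life cycle."""
    return {name: sum(row) for name, row in costs.items()}


def print_matrix(costs):
    """Print the matrix with phases as rows so long phase names stay readable."""
    names = list(costs)
    print(f"{'phase':<28}" + "".join(f"{n:>16}" for n in names) + f"{'total':>10}")
    for i, (phase, total) in enumerate(zip(PHASES, phase_totals(costs))):
        cells = "".join(f"{costs[n][i]:>16}" for n in names)
        print(f"{phase:<28}{cells}{total:>10}")


if __name__ == "__main__":
    print_matrix(COSTS)
```

Even this crude layout makes visible where cost accumulates across the life cycle, which is the starting point for the more refined forms of the analysis.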

| 3 | International Council on Systems Engineering. Available at: http://www.incose.org. Accessed April 2004. |


FIGURE 3-3 Life-cycle phases collapsed into four. Source: R. Garrett, Naval Surface Warfare Center, "Opportunities in Modeling and Simulation to Enable Dramatic Improvements in Ordnance Design," presented to the Committee on Bridging Design and Manufacturing, National Research Council, Washington, D.C., April 29, 2003.

A significant number of companies are already identifying where there is a need to communicate and work together both within the company divisions and with other companies. In its second-generation form, engineering cost analysis software will approximate the costs associated with each phase of the product development–realization cycle. In its ultimate form, the engineering cost analysis will include and improve upon all of systems engineering's current discrete event optimization functions; but, more importantly, it will extend forward in time to include accurate estimates for various design, material, and process selection options. In some instances, it may also include the determination of the optimum product concept to satisfy the intended customers' needs and cost constraints. Numerous presentations to the committee made the point that increased and improved communications between all phases will significantly reduce the time from concept to first article.

Some examples of time savings that have already been achieved were presented to the committee:4

- Chrysler, Ford, and GM have reduced the interval from concept approval to production from 5 to 3 years.
- Electric Boat has been able to cut the time required for submarine development in half, from 14 years to 7 years.
- 38 Sikorsky draftsmen took 6 months to develop working drawings of the CH-53E Super Stallion's outside contours. With virtual modeling and simulation, a single engineer accomplished the same task for the RAH-66 Comanche Helicopter in 1 month.
- 14 engineers at the Tank and Automotive Research and Development Center designed a low-silhouette tank prototype in 16 months. By traditional methods this would have taken 3 years and 55 engineers.
- Northrop Grumman's CAD systems provided a first-time, error-free physical mockup of many sections of the B-2 aircraft.
- The U.S. Navy's modeling and simulation processes for the Virginia-class submarine reduced the standard parts list from ~95,000 items for the earlier Seawolf-class submarine to ~16,000 items.

It is necessary to provide the engineer at the CAD terminal with new and improved software tools that can give guidance regarding the life-cycle costs of each design decision in both preliminary and detailed design. For example, specific data could be made available to the designer regarding the alternative costs of various manufacturing approaches, such as automatic tape lay-up, injection molding, or electron beam welding, which could be selected to reduce unit manufacturing costs. In addition, reliability data for such proven components as hydraulic actuators, electrical connectors, and generators could easily be made available through interconnected databases to achieve a first-cut design that is reliable, maintainable, and low cost. This would be a huge step toward giving customers low total life-cycle costs.

| 4 | M. Lilienthal, "Observations on the Uses of Modeling and Simulation," presented to the Committee on Bridging Design and Manufacturing, National Research Council, Washington, D.C., February 24, 2003. |

FIGURE 3-4 Life-cycle phases expanded into the eight indicated at the top of the figure. Source: A. Adlam, U.S. Army, "TACOM Overview," presented to the Committee on Bridging Design and Manufacturing, National Research Council, Washington, D.C., June 25-26, 2003.

It is critical to note, although it is generally ignored, that the geometrical shape of a part or assembly determines the manufacturing processes by which it may be made and thereby, inadvertently, limits the materials to just those few that are suitable for those processes. This limitation has led to the rule of thumb that the majority of cost reduction opportunities are lost at the time a part is designed. Frequently, multiple design concepts or design/material combinations will satisfy a desired function. For that reason, it is imperative that all design options, along with their associated manufacturing processes and materials, be evaluated prior to committing to a final design strategy.

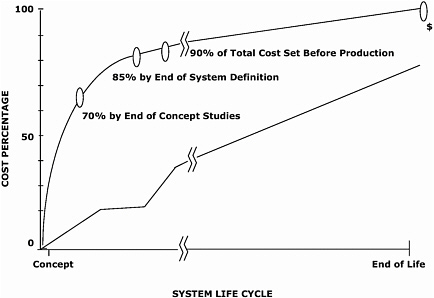

Again, several presentations to this committee emphasized the importance of improving the assessment of needs and the exploration of the design trade space as a means to minimize total life-cycle costs. Figure 3-5 shows one author's views on when and where the full cost of a product is locked in. Other authors provided additional guidelines to support the value of up-front design analysis.

Some guidelines presented to the committee5,6 are listed below:

- Continue the early collaborative exploration of the largest possible trade space across the life cycle, including manufacturing, logistics, time-phased requirements, and technology insertion.
- Perform assessments based on modeling and simulation early in the development cycle, with alternative system designs built, tested, and operated in the computer before critical decisions are locked in and manufacturing begins.
- Wait to develop designs until requirements are understood.
- Requirements are the key. Balance them early!
- Once the design is drawn, the cost and weight are set.
- No amount of analysis can help a bad design get stronger or cheaper.
- Remember that 80 percent of a product's cost is determined by the number of parts, assembly technique, manufacturing processes, tooling approach, materials, and tolerances.

| 5 | J. Hollenbach, "Modeling and Simulation in Aerospace," presented to the Committee on Bridging Design and Manufacturing, National Research Council, Washington, D.C., February 24, 2003. |

| 6 | A. Haggerty, "Modeling the Development of Uninhabited Combat Air Vehicles," presented to the Committee on Bridging Design and Manufacturing, National Research Council, Washington, D.C., April 29, 2003. |

FIGURE 3-5 Product cost locked in very early in process. Source: M. Lilienthal, Defense Modeling and Simulation Office, "Observations on the Uses of Modeling and Simulation," presented to the Committee on Bridging Design and Manufacturing, National Research Council, Washington, D.C., February 24-25, 2003.

The linkages between design, manufacturing, and materials, combined with the value of reaching the customer in the least amount of time, support a robust business case for quick development of initial products. This increased effort at the start would come at a higher-than-optimal initial cost, but scheduled updates and design changes would later improve reliability and cost while maintaining service part commonality. This approach could also result in the discovery of design and manufacturing strategies corresponding to an immediate need; when the product development cycle is shortened, products can be designed to be more responsive to specific customer requirements.7 Design and manufacturing concepts that factor in the initial cost to design and manufacture as well as the cost to maintain production and service parts far into the future could be discovered as well.

In its early forms, engineering cost analysis will be forced to simplify the details of most steps of the process, from concept to realization, by assuming generalized time and cost models. While this may seem crude, a manufacturing engineer frequently can make a relatively accurate estimate of a part cost based on its general shape, size, and function, just as a product engineer can provide a similarly accurate estimate of the cost to design a product (or number of parts) based on its complexity, size, and intended use. In this early form, the engineering cost analysis is unlikely to provide an accurate final cost, but it is expected to accurately rank the various options examined. As computing power and cost continue to follow Moore's law and as individual program data input/output structures are modified to complement each other, more refinements and accuracy will be obtained and more options may be explored. However, today's state of the art in cost analyses is still inadequate. Development of refined cost analysis tools is vitally needed in the aerospace industry where "dollars per pound" of airframe is still used for many calculations.
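A generalized cost model of the kind described here can be very small and still rank options usefully. The sketch below is an assumption-laden illustration: the three design options, their masses, material prices, tooling costs, cycle times, and the labor rate are invented for the example, and the three-term formula is only one plausible simplification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    """One design/material/process combination (all values hypothetical)."""
    name: str
    mass_kg: float
    material_cost_per_kg: float
    tooling_cost: float      # one-time cost, amortized over the production volume
    cycle_minutes: float     # processing time per part


LABOR_RATE_PER_MIN = 1.0     # assumed fully burdened rate, dollars per minute

OPTIONS = [
    Option("machined aluminum", 1.2, 6.0, 5_000.0, 12.0),
    Option("cast aluminum", 1.4, 5.0, 30_000.0, 4.0),
    Option("injection-molded polymer", 0.8, 3.0, 80_000.0, 1.0),
]


def unit_cost(option, volume):
    """Generalized estimate: material + amortized tooling + processing time."""
    material = option.mass_kg * option.material_cost_per_kg
    tooling = option.tooling_cost / volume
    processing = option.cycle_minutes * LABOR_RATE_PER_MIN
    return material + tooling + processing


def rank(options, volume):
    """Order options from cheapest to most expensive at a given volume."""
    return sorted(options, key=lambda o: unit_cost(o, volume))


if __name__ == "__main__":
    for volume in (500, 10_000):
        print(volume, [o.name for o in rank(OPTIONS, volume)])
```

The absolute figures from such a model should not be trusted, but the ordering can still be informative: with these invented inputs the ranking reverses between a production volume of 500 and one of 10,000, because tooling dominates at low volume and per-part time dominates at high volume.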

Whether engineering cost analysis is only another in a series of evolutionary improvements in the industrial systems engineering business process or truly a step function improvement, there will still be significant unknowns and effort required to bring it to fruition and realize its value. Unifying the description of parts (two-dimensional vs. three-dimensional, or solid vs. surface), characterizing manufacturing process effects, designing and developing materials and verifying their properties, creating complementary interprogram data structures, and developing virtual visualization tools will not be easy and will require significant research. However, these developments still only require appropriate funding, time, and discipline to complete. In order to take advantage of these developments, it will be necessary to change the design and manufacturing business culture, so that it focuses on the total life-cycle cost rather than the cost of discrete events. Without this change, designers and manufacturing engineers will remain within their disciplines and continue to suboptimize their portion at the expense of the whole.

Manufacturing Cost Modeling

During conceptual design and concurrent with all other design-for-X (DfX) activities, the life-cycle cost of a product should be addressed. Twenty years ago engineers involved in the design of products may not have concerned themselves with the cost-effectiveness of their design decisions; that was someone else's job. Today the world is different. All engineers in the design process for a product are also tasked with understanding the economic trade-offs associated with their decisions. At issue are not just the manufacturing costs but also the costs associated with the product's life cycle.

Several different types of cost-estimating approaches are potentially applicable at the conceptual design level where engineering decisions about the technology and material content of a product are made.

| 7 | An example might be a switch from a desert to an arctic conflict or simply between armed conflicts. |

Traditional material cost analysis uses parametric methods to determine the quantity of a material required in a product. The model then applies a cost policy that includes how the manufacturer does quoting, inventory methods, and method of purchase of commodity materials. To determine the total manufacturing cost, material costs are combined into traditional cost accounting methods where labor costs are included and overhead is applied. Variations in how material, labor, and overhead costs are computed and combined abound and are summarized in the following paragraphs.
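A minimal sketch of a traditional roll-up of material, labor, and overhead is given here. Applying overhead as a multiple of direct labor is one common convention and is an assumption in this example, as are the parameter names and the sample numbers; as noted, many variations exist.

```python
def traditional_unit_cost(material_kg: float,
                          price_per_kg: float,
                          labor_hours: float,
                          labor_rate: float,
                          overhead_rate: float) -> float:
    """Material + direct labor + overhead applied as a multiple of direct labor."""
    material = material_kg * price_per_kg   # quantity from a parametric estimate
    labor = labor_hours * labor_rate        # direct labor only
    overhead = overhead_rate * labor        # assumed allocation base: direct labor
    return material + labor + overhead


# Hypothetical part: 2 kg at 5 per kg, 0.5 labor hours at 40 per hour, 150% overhead
print(traditional_unit_cost(2.0, 5.0, 0.5, 40.0, 1.5))
```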

Activity-based costing8 (ABC) focuses on accurate allocation of overhead costs to individual products. Other methods include function or parametric costing9 in which costs are interpolated from historical data for a similar system. Similarly, empirical or cost-scaling methods10 are parametric models based on a feature set. Parametric-based models are applicable to evolutionary products where similar products have previously been constructed and large quantities of high-quality historical data exist.
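As an illustration of parametric costing, the sketch below fits a power-law cost-scaling relationship, cost = a * size**b, to historical data by least squares in log-log space and then uses it to estimate a new product. The power-law form, the function names, and the data are assumptions made for this example and are not taken from the cited sources.

```python
import math


def fit_power_law(sizes, costs):
    """Least-squares fit of cost = a * size**b on log-transformed data."""
    xs = [math.log(s) for s in sizes]
    ys = [math.log(c) for c in costs]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx                       # scaling exponent
    a = math.exp(mean_y - b * mean_x)   # cost at size = 1
    return a, b


def estimate_cost(size, a, b):
    """Interpolate the cost of a new product from the fitted relationship."""
    return a * size ** b


# Hypothetical historical data: part mass in kg and realized unit cost
history_sizes = [10.0, 20.0, 40.0, 80.0]
history_costs = [120.0, 210.0, 365.0, 640.0]

a, b = fit_power_law(history_sizes, history_costs)
print(f"a = {a:.2f}, b = {b:.3f}")
print(f"estimated cost at 50 kg: {estimate_cost(50.0, a, b):.1f}")
```

The estimate is only as good as the historical data, which is why such models suit evolutionary rather than novel products.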

Sequential process flow models11 attempt to emulate the actual manufacturing process by modeling each step in sequence and are particularly useful when testing, reworking, and scrapping occur at one or more places in the process. Resource-based cost modeling12 assesses the resources of materials, energy, capital, time, and information associated with the manufacture of a product and aims to enable optimum process selection. Resource-based modeling is similar to the specific process step models embedded within sequential process flow models. Each process step model sums up the resources associated with the step (labor, materials, tooling, equipment) to form a cost for the step that is accumulated with other steps in sequence. Resource-based modeling is the same as sequential step modeling except that use of a specific sequence is not necessarily required.
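The accumulation described above can be sketched as follows. Each step sums its resource costs, and a step with a yield below 1.0 scraps the failing units, so the money already spent on them is spread over the units that pass. This sketch handles scrap only, not rework, and the step names and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    name: str
    labor: float = 0.0
    materials: float = 0.0
    tooling: float = 0.0
    equipment: float = 0.0
    yield_fraction: float = 1.0  # fraction of units that pass; the rest are scrapped

    def cost(self) -> float:
        """Resource-based cost of performing this step on one unit."""
        return self.labor + self.materials + self.tooling + self.equipment


def cost_per_good_unit(steps) -> float:
    """Accumulate step costs in sequence, inflating for units scrapped at each step."""
    accumulated = 0.0
    for step in steps:
        accumulated += step.cost()
        accumulated /= step.yield_fraction  # scrapped units' cost falls on survivors
    return accumulated


flow = [
    Step("mold", labor=2.0, materials=6.0, tooling=1.0, equipment=1.0),
    Step("test", labor=1.0, equipment=1.0, yield_fraction=0.75),
]
print(cost_per_good_unit(flow))
```

Because scrap inflates everything accumulated so far, the position of a test step in the sequence changes the result, which is the feature that distinguishes a sequential model from a simple sum of step costs.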

Technical cost modeling carries cost modeling one step further by introducing physical models associated with particular processes into the cost models of the actual production activities. Technical cost modeling13 also incorporates production rate information.

With the advent of products such as integrated circuits, whose manufacturing costs have smaller materials and labor components and greater facilities and equipment (capital) components, new methods for computational cost modeling have appeared such as cost of ownership (COO).14 Cost of ownership modeling is fundamentally different from sequential process flow cost modeling. In a COO approach, the sequence of process steps is of secondary interest; the primary interest is determining what proportion of the lifetime cost of a piece of equipment (or facility) can be attributed to the production of a single piece part. Lifetime cost includes initial purchase and installation costs as well as equipment reliability, utilization, and defects introduced in products that the equipment affects. Accumulating all the fractional lifetime costs of all the equipment for a product gives an estimate of the cost of a single unit of the product. Labor, materials, and tooling in COO are included within the lifetime cost of particular equipment.
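A simplified sketch of the COO idea follows: each piece of equipment contributes its lifetime cost divided by the number of good units it produces over its life, and the contributions are summed. The field names and figures are hypothetical, the sketch assumes every unit visits each piece of equipment once, and it is not the formula of any particular commercial tool or standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Equipment:
    name: str
    purchase_and_install: float  # one-time cost
    annual_operating: float      # labor, materials, tooling, maintenance per year
    life_years: float
    hours_per_year: float        # scheduled production hours
    utilization: float           # fraction of scheduled hours actually producing
    throughput_per_hour: float   # units processed per productive hour
    yield_fraction: float        # fraction of processed units left defect free

    def lifetime_cost(self) -> float:
        return self.purchase_and_install + self.annual_operating * self.life_years

    def lifetime_good_units(self) -> float:
        return (self.life_years * self.hours_per_year * self.utilization
                * self.throughput_per_hour * self.yield_fraction)

    def cost_per_good_unit(self) -> float:
        """Share of this equipment's lifetime cost attributed to one good unit."""
        return self.lifetime_cost() / self.lifetime_good_units()


def unit_cost_of_ownership(equipment_list) -> float:
    """Sum the fractional lifetime costs of all equipment a unit passes through."""
    return sum(e.cost_per_good_unit() for e in equipment_list)


line = [
    Equipment("etcher", 1_000_000.0, 200_000.0, 5.0, 8000.0, 0.5, 10.0, 0.8),
    Equipment("tester", 250_000.0, 50_000.0, 5.0, 8000.0, 0.5, 10.0, 1.0),
]
print(unit_cost_of_ownership(line))
```

Lower utilization or yield reduces the denominator, so the same equipment cost is carried by fewer good units.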

As one might expect, there are pros and cons associated with all the approaches outlined above. Also, nearly all of the basic manufacturing cost models are supplemented by yield models, learning curve models, and test/rework economic models. Many commercial vendors exist for manufacturing cost modeling tools. Some examples are listed here:

| 8 | P.B.B. Turney, "How Activity-Based Costing Helps Reduce Cost," Journal of Cost Management for the Manufacturing Industry, Vol. 4, No. 4, pp. 29-35, 1991. |

| 9 | A.J. Allen and K.G. Swift, "Manufacturing Process Selection and Costing," Proceedings of the Institution of Mechanical Engineers, Part B: Journal of Engineering Manufacture, Vol. 204, No. 2, pp. 143-148, 1990. |

| 10 | G. Boothroyd, P. Dewhurst, and W.A. Knight, Product Design for Manufacture and Assembly, Marcel Dekker, Inc., 1994. |

| 11 | C. Bloch and R. Ranganathan, "Process Based Cost Modeling," IEEE Transactions on Components, Hybrids, and Manufacturing Technologies, pp. 288-294, June 1992. |

| 12 | A.M.K. Esawi and M.F. Ashby, "Cost Ranking for Manufacturing Process Selection," Proceedings of the 2nd International Conference on Integrated Design and Manufacturing in Mechanical Engineering, Compiègne, France, 1998. |

| 13 | T. Trichy, P. Sandborn, R. Raghavan, and S. Sahasrabudhe, "A New Test/Diagnosis/Rework Model for Use in Technical Cost Modeling of Electronic Systems Assembly," Proceedings of the International Test Conference, pp. 1108-1117, November 2001. |

| 14 | R.L. LaFrance and S.B. Westrate, "Cost of Ownership: The Suppliers View," Solid State Technology, pp. 33-37, July 1993. |

- Cognition: process flow cost modeling
- Wright Williams & Kelly: cost of ownership modeling
- Savantage: conceptual design cost modeling
- ABC: ABC cost modeling
- IBIS: technical cost modeling
- Prismark: niche parametric manufacturing cost modeling (for printed circuit boards)

Another area that is often overlooked is cost modeling for software development. Systems are a combination of hardware and software. Ideally hardware and software are "codesigned." Codesign allows an optimum partitioning of the required product functionality between hardware and software. Cost needs to be considered when these partitioning decisions are made. Several commercial tools allow the cost of developing new software, qualifying software, rehosting software, and maintaining software to be modeled. Historically, many of these tools are based on a public domain tool called COCOMO15 and later evolutions of it.
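For orientation, the Basic COCOMO relations from Boehm's 1981 book estimate effort in person-months as a * KLOC**b and schedule in months as c * effort**d, with coefficients that depend on the project class. The sketch below encodes those Basic-model coefficients; the function names are illustrative, and the intermediate and later COCOMO versions add cost drivers that are not modeled here.

```python
# Basic COCOMO coefficients (a, b, c, d) by project class, per Boehm (1981)
BASIC_COCOMO = {
    "organic": (2.4, 1.05, 2.5, 0.38),
    "semidetached": (3.0, 1.12, 2.5, 0.35),
    "embedded": (3.6, 1.20, 2.5, 0.32),
}


def cocomo_effort(kloc: float, project_class: str) -> float:
    """Estimated development effort in person-months for `kloc` thousand lines."""
    a, b, _, _ = BASIC_COCOMO[project_class]
    return a * kloc ** b


def cocomo_schedule(effort_pm: float, project_class: str) -> float:
    """Estimated development time in months for a given effort."""
    _, _, c, d = BASIC_COCOMO[project_class]
    return c * effort_pm ** d


for cls in BASIC_COCOMO:
    effort = cocomo_effort(32.0, cls)
    months = cocomo_schedule(effort, cls)
    print(f"{cls:>12}: {effort:6.1f} person-months over {months:4.1f} months")
```

In a hardware and software partitioning study, an estimate of this kind could be compared with the hardware cost of implementing the same function, although the Basic model is a coarse guide only.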

Life-Cycle Cost Modeling

While manufacturing costing is relatively mature, life-cycle cost modeling is much less developed. For many types of products, manufacturing costs represent only a portion, sometimes a small portion, of the cost of the product. Nonmanufacturing life-cycle costs include design, time-to-market impacts, liability, marketing and sales, environmental impact (end of life), and sustainment (reliability and maintainability effects). While reliability has been addressed by conventional DfX activities, other sustainability issues such as technology obsolescence and technology insertion are rarely addressed proactively.

There are existing commercial tool vendors in the life-cycle cost modeling space as well; for example:

- Price Systems: parametric life-cycle management costing
- Galorath: parametric life-cycle management costing (SEER tools)
- NASA: well-developed parametric cost modeling capabilities

One particular example of a life-cycle cost contributor for many types of systems is the lack of design-level treatment of technology obsolescence and insertion. This problem is already pervasive in avionics, military systems, and industrial controls and will become a significant contributor to life-cycle costs of many other types of high-technological-content systems within the next 10 years. Unfortunately, technology obsolescence and insertion issues cannot be treated during design today because methodologies, tools, and fundamental understanding are lacking.

Systems Engineering Issues

Box 3-1 shows four case studies of the use of systems engineering software tools in industry. In addition, the National Defense Industrial Association Systems Engineering Division Task Group Report,16 issued in January 2003, listed the top five issues in systems engineering:

| 15 | B. Boehm, Software Engineering Economics, Prentice-Hall, Inc., Upper Saddle River, N.J., 1981, p. 1. |

| 16 | The National Defense Industrial Association, The National Defense Industrial Association Systems Engineering |

- Lack of awareness of the importance, value, timing, accountability, and organizational structure of systems engineering programs
- Lack of adequate, qualified resources within government and industry for allocation within major programs
- Insufficient systems engineering tools and environments to effectively execute systems engineering within programs
- Inconsistent and ineffective application of requirements definition, development, and management
- Poor initial program formulation

As with curriculum modification in the educational system, the business and employee reward system will also need overhauling to ensure that it rewards those who think strategically rather than those who function in the old but safe ways. So long as the ones receiving the greatest rewards are the designers who turn out the greatest number of prints and models, or the purchasing agents who negotiate the lowest price for a given part, process, or material, no one can justify spending any significant amount of time or effort in the development of a better method to achieve design goals.

Initially, this cultural change may require a totally separate organizational unit with a reward structure tailored to recognize enterprise successes rather than discrete events. To be fully successful, this new culture ultimately has to infect all levels and units of an organization. For that to happen incentives are needed for the manufacturing leadership to change both itself and the culture. The saving aspect of such a sea-change is that, once it is a part of the culture, all the participants—both old and new—will win. The need to focus a portion of product development on minimizing time and costs in the traditional ways will remain but will be incorporated into the larger picture.

Systems Engineering Opportunities

While the committee found many areas where there is need for data structure, program interconnectivity, and visualization, the entire area of systems engineering presents new opportunities to rethink what process an organization or institution utilizes as it proceeds from the needs assessment phase through design and manufacturing to use, support, and ultimately disposal. Several significant and valuable characteristics become routine through the rigorous application of systems engineering. A few of the most significant are listed here:

- Forces discipline
- Creates multidimensional, conceptual design trade space
- Enables multidisciplinary optimization
- Promotes and requires interoperability

Other related factors include the following:

- Forces cultural change in rewards
- Tools exist but not in widespread use
- Training required (academic and industrial)

Box 3-1

Four Case Studies on the Use of Systems Engineering Software Tools

Case Study 1

A simple example that illustrates trade-offs in design is the device that locates and carries the bearings and wheels that support the track on a tracked vehicle (such as a tank). This carrier can be designed either as an assembly of welded plates or as a casting. The welded version can be designed and placed into production in a fraction of the time required for a casting but is more costly to produce. An engineering cost analysis could predict that, for initial production and for long-term future service parts availability, the welded assembly would be preferred. That same analysis could predict that for many large-scale production applications, a casting would be preferred. The costs and value of each option could be evaluated and the lowest total cost option selected. And that analysis could predict that both options need to be developed: the welded version for initial production, and a scheduled update to replace it with a casting for the majority of its production life followed by reverting to a weldment when it goes into service-parts-only production.
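The cost side of this trade-off can be sketched as a break-even calculation between an option with low up-front cost and high unit cost (the weldment) and one with high up-front cost and low unit cost (the casting). All figures below are hypothetical, and the sketch ignores the time-to-production advantage of the weldment that the case study also weighs.

```python
def total_cost(fixed_cost: float, unit_cost: float, volume: float) -> float:
    """Up-front design and tooling cost plus recurring production cost."""
    return fixed_cost + unit_cost * volume


def break_even_volume(fixed_a: float, unit_a: float,
                      fixed_b: float, unit_b: float) -> float:
    """Volume at which options A and B have equal total cost."""
    if unit_a == unit_b:
        raise ValueError("unit costs are equal; total costs never cross")
    return (fixed_b - fixed_a) / (unit_a - unit_b)


# Hypothetical figures for the track-wheel carrier
WELDMENT = {"fixed": 20_000.0, "unit": 500.0}
CASTING = {"fixed": 200_000.0, "unit": 200.0}


def preferred_option(volume: float) -> str:
    """Name the option with the lower total cost at the given volume."""
    weld = total_cost(WELDMENT["fixed"], WELDMENT["unit"], volume)
    cast = total_cost(CASTING["fixed"], CASTING["unit"], volume)
    return "weldment" if weld <= cast else "casting"


crossover = break_even_volume(WELDMENT["fixed"], WELDMENT["unit"],
                              CASTING["fixed"], CASTING["unit"])
print(f"break-even volume: {crossover:.0f} units")
for volume in (100, 5000):
    print(volume, preferred_option(volume))
```

Low-volume phases such as initial production and service-parts-only production fall below the crossover, which is consistent with the weldment, casting, weldment sequence described in the case study.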

Case Study 2

Siemens Transportation Systems utilized collaborative virtual product development software to create a complete cross-functional product definition and system-level simulation environment to validate total product functionality during the crucial concept phase of railway car manufacturing. Heinz-Simon Keil, Department Head, Corporate Technology, Production Processes Virtual Engineering: "… functional prototyping has enabled Siemens Transportation Systems to accelerate the overall virtual prototyping process and correct potentially costly errors on the fly before such errors are discovered in manufacturing." a

Case Study 3

Conti Temic Product Line Body Electronics utilized software tools to standardize model-based development for electronic control units (ECUs). It selected an integrated tool suite, the Statemate MAGNUM and Rhapsody in MicroC tool chain (or suite), to graphically specify designs, improve communication within its development team and with customers, reduce time to market through component reuse, and reduce costs by validating systems and software designs up front prior to implementation. This integrated tool suite provides Conti Temic with a development process from requirements to code, ensuring its applications are complete and accurate.

Conti Temic Body Electronics committed to the model-based approach due to the complexity and diversity of body control ECUs. Conti Temic system engineers create formal requirements specifications and then test their models in a virtual prototyping environment, ensuring that their ECU models are error free. The system behavior is validated as an integral part of the design process, before anything is built. Conti Temic is able to execute, or simulate, the design—either complete or partially complete—prior to implementation. Analyzing its specifications up front, Conti Temic ensures that behavior is correct and captures test data that will be used later to test the implementation. Conti Temic benefits from the ability to automatically generate high-quality target code, easily capture and use features or functions throughout the development process, and automatically generate documentation from the completed specification model. Working with supplier requirements, Conti Temic is able to visually express systems functionality and ensure, up front in the design process, that the product will meet the specifications.

In many cases, automotive original equipment manufacturers (OEMs) provide Conti Temic actual requirements models. The use of a standard tool between OEM and supplier could greatly reduce miscommunication common within the automotive industry. "In addition to facilitating communication, Conti Temic Body Electronics is able to quickly make changes and ensure their designs are solid if issues arise in ECU integration, functionality is changed late in the game, cost reductions are mandated, or variant derivation has to be incorporated and validated within the product," said Andreas Nagl, Software-Engineering, Conti Temic Product Line Body Electronics. "Due to the complexity of our products, the synchronization effort for requirements, analysis/design models, code and test cases increased exponentially with conventional static CASE tools. The Statemate MAGNUM and Rhapsody in MicroC tool chain (or suite), unlike static CASE tools, allows us to 'feel' the systems behavior, make changes on the fly, validate our design, generate code and conduct tests much more quickly." b

Case Study 4

An aerospace contractor used requirements management software to model the systems architecture of a winner-take-all proposal to build a new cost-efficient AWACS aircraft for the Royal Australian Air Force. The contractor realized that using its standard document-driven methodology would not be successful and so it modeled the architecture at both a high level of abstraction and according to scenarios with lower levels of fidelity. The contractor also modeled its business processes and other key mission-critical items. This allowed the company to uncover and rectify many unforeseen issues significantly earlier in the design process and to reduce the risk to the proposal. The company realized that the use of both static and dynamic modeling had become indispensable to reducing program risk.

The contractor also decided to build multiple segmented and secure baselines for future use because it wanted to capture all of the key design knowledge of this project for use in developing future proposals. This data included classified, vendor proprietary, and customer-specific information that could not be shared in a single repository. This approach reflects the understanding that the ability to capture and reuse design knowledge is critical for long-term program evolution.

In the first design review, the customer found no discrepancy reports and the results of the final design review mirrored the first. The contractor team won the proposal on both technical merit and cost. The cost savings were so significant that the customer was able to purchase additional options once thought beyond its budget. Key to this success was project-level collaboration among all team members—systems engineers, software engineers, and managers—including translating among subcontractor design process methods and standards.

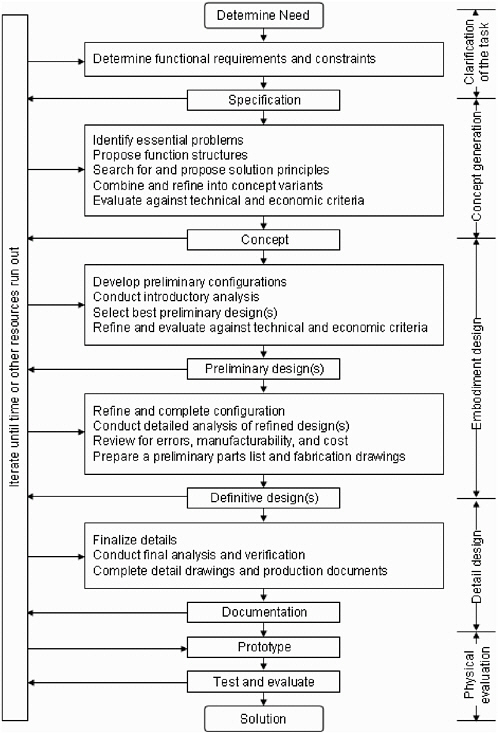

ENGINEERING DESIGN TOOLS

Engineering design is the process of producing a description of a device or system that will provide a desired performance or behavior. Figure 3-6 is a typical chart depicting the steps in this process. The process begins with the determination or assessment of a need. Next, a set of requirements and constraints is established for the device or system, often also including a list of desired performances. At this stage in the process, the engineering team begins to propose alternative configurations. A series of calculations and analyses is performed to estimate how well each of the proposed configurations will satisfy the requirements and constraints and deliver the desired performances. These analyses typically range from hand calculations to simple analytic expressions to more sophisticated modeling and simulation, such as finite element modeling, thermal modeling, and computational fluid dynamics.

The results of these simulations are interpreted by the design engineers and used to refine the proposed configurations, and to reject those that appear to not satisfy the requirements and constraints. It is common to construct at least one prototype to verify the simulated performance of the proposed configuration(s). In this process, design engineers focus their attention primarily on achieving the desired performances, while meeting the requirements and constraints. Questions of manufacturability are typically a secondary consideration during the design process.

Engineering design is an iterative process between every step in the design process. Figure 3-6 does not proceed linearly downward. Instead, there are iterations back and forth between all the steps in the engineering design process, as shown by the long column box at the left in Figure 3-6.

Once a design configuration is selected, analyzed, simulated, prototyped, and validated, the design information is passed to the manufacturing engineers to design the manufacturing systems and processes to fabricate the design in the desired quantities. This manufacturing engineering process entails many of the same steps as in the engineering design process, including the application of sophisticated modeling and simulation.

Relationship of Engineering Design Tools to Manufacturing

Since the device or system must be fabricated in order to deliver a desired performance or behavior, engineering design should be closely linked to manufacturing. Indeed, frequently questions of manufacturability severely limit the range of design options available. However, in current engineering practice, as described above, the link between design and manufacturing is largely informal, based on the knowledge and experience of the engineers involved. While many engineering design and analysis software packages exist, and several powerful manufacturing simulation software packages exist, the link between these two domains remains weak. Appendix C describes some of the current engineering design tools, and Appendix D provides a list of representative vendors of computer-based tools used for design and other functions.

Current Status of the Bridge Between Design and Manufacturing

Figure 3-1 depicts the current status of the link, or bridge, between engineering design and manufacturing. The columns represent many of the identifiable stages in the design of a new product or system, with time proceeding from left to right. The rows indicate whether the tool or process depicted in the diagram contributes to strategic planning and decisions, or applies to tactical decisions and steps, or plays a role in carrying out the execution of the stage in the process. The width of the ellipse surrounding the name of each tool indicates the stages in the process in which the tool can play a role. The height of the ellipse indicates where the tool is effective, along the range from purely strategic planning to execution of individual process steps. Blue ellipses indicate engineering design processes. Green ellipses indicate manufacturing-related processes. Yellow ellipses indicate business functions, orange ellipses indicate data management, and empty ellipses represent overarching processes. The degree of overlap between ellipses indicates how much interaction there should be between tools.

As can be seen in Figure 3-1, engineering modeling, simulation, and visualization can play a strategic role in product architecture planning, and also a tactical role in guiding the detailed design processes. The detailed design process is initiated from the results of product architecture planning, and it results in a precise description of the product to be manufactured. The diagram shows that there is little overlap between manufacturing modeling and simulation tools, or manufacturing process planning, and engineering design tools, reflecting the lack of interoperability between these steps with currently available software. However, there are efforts being made to change this situation.

FIGURE 3-6 Steps in the engineering design process. Source: Adapted from G. Pahl and W. Beitz, Engineering Design, p. 41. Copyright © 1984 Springer-Verlag. Reprinted by permission of Springer-Verlag GmbH, Heidelberg, Germany.

Box 3-2 shows a case study of an integrated design approach applied to unmanned undersea vehicles (UUVs). Past research and development activities to create a highly flexible and responsive design environment include the Defense Advanced Research Projects Agency's (DARPA) Rapid Design Exploration and Optimization (RaDEO) program.17 Unigraphics NX is one example of an end-to-end product development solution for a comprehensive set of integrated design, engineering, and manufacturing applications.18

Roles for Computational Tools

As Figure 3-1 illustrates, the intercommunication and interoperation of engineering design and manufacturing computational tools can play a key role in establishing a strong bridge between design and manufacturing. During the study, the committee received multiple presentations that outlined a future vision of a stronger bridge and identified key difficulties that such a bridge could overcome, including:

- Multiple models: During the engineering and manufacturing process, each stage of the process, and each engineering discipline, typically employs computational tools, and builds computational models, that are unique to that activity. A key challenge is the time and effort required to create multiple models, and the difficulty in ensuring that the models are consistent. It is common for a change in configuration of a design to be introduced in one model but not propagate to others, leading to inconsistent analyses of performance and failures in the field.
- Design reuse: Because of the large number of noninteroperating engineering design and manufacturing software packages in use, it is not common for models of earlier similar designs to be reused and improved. Typically, a new model of an improved component or subsystem is constructed. In this environment, the incentive for engineers to reuse portions of earlier designs is limited.
- "No-build" conditions: One of the key roles for a stronger bridge between engineering design and manufacturing was repeatedly identified during the course of the study as the need, particularly early in the engineering design process, to be able to easily identify designs that cannot be fabricated or assembled (no-build conditions). Without the ability to analyze early designs for their capability to be fabricated and assembled, unrealizable designs can persist late into the design process.
- Early manufacturing considerations: Decisions made during the early engineering design stages typically determine 70 to 80 percent of the final cost of a product. Without a strong bridge between design and manufacturing, these decisions are commonly made with little information relating to costs and degree of difficulty of fabrication and assembly of a proposed design. This lack of manufacturing information in the early engineering design decision-making process is a key contributor to cost overruns late in the development cycle of a product.

Advancing the interoperability19 and composability20 of design and manufacturing software, particularly modeling and simulation, will contribute significantly to reducing the difficulties enumerated above.

- Multiresolution21 interoperable models will reduce or eliminate the problems of multiple models and inconsistent analyses.
- Intercommunication between multiple engineering and manufacturing software packages will greatly enhance the ability of engineers to retrieve models of earlier designs and establish a starting point for a next-generation product.

| 17 | Defense Advanced Research Projects Agency, Rapid Design Exploration and Optimization. Available at: http://www.darpa.mil/dso/trans/swo.htm. Accessed February 2003. |

| 18 | Unigraphics PLM Solutions, an EDS company, NX: Overview. Available at: http://www.eds.com/products/nx/. Accessed May 2004. |

| 19 | In this context, interoperability is the ability to integrate some or all functions of more than one model or simulation during operation. It also describes the ability to use more than one model or simulation together to collaboratively model or simulate a common synthetic environment. |

| 20 | In this context, composability is the ability to select and assemble components of different models or simulations in various combinations into a complex software system. |

Box 3-2

Integrated Design of Unmanned Undersea Vehicles

Technological innovations are facilitating the expanded use of UUVs to perform complex and dangerous missions. a Improvements in sensors, guidance and control, power systems, and propulsion systems have dramatically improved the functionality, flexibility, and performance of these vehicles. As a result, commercial and military interest in these vehicles and in the potential dual-use capability of these enabling technologies is keen. Another important technological innovation involves the design process itself.

Researchers at the Applied Research Laboratory (ARL) at Pennsylvania State University recently developed an integrated design process utilizing advanced computational methods and successfully applied it to develop a UUV system. Development of a new design methodology was made necessary by contractual requirements that mandated substantially shorter design time and lower design and ownership costs than traditional design methodology could deliver. Another inducement was the expectation that this investment in developing an integrated system could spill over to other applications and projects in the future. By several measures the effort was a success, reducing project cost and cutting development time from 3 to 4 years to 12 to 18 months.

The integrated design tools developed by ARL minimize the need for numerous and expensive interim experiments, relying more on fundamental, physics-based computational models of fluid dynamics. The design path is conceptually similar to conventional design paths, with tunnel testing replaced with computer simulations. For example, the propulsor design and analysis tool (PDAT) and Reynolds-averaged Navier-Stokes (RANS) analysis are used extensively to simulate the drag and stability of a vehicle under different design parameters, such as length, diameter, and nose and tail contours. Computer automated design is also used for mechanical design and structural analysis. The maneuverability of the vehicle is simulated using an ocean dynamics model and pitching motion simulations, essentially replacing physical with numerical experiments.

The first vehicle designed with this integrated approach was completed in 14 months, below projected cost, and verified at Lake Erie. The UUV requirements, however, are generally far less demanding than those of many other defense and commercial products. The mission requirements for the UUV include low speed, variable payloads, a small fleet of 15 to 20 vehicles, and long-endurance missions. The design criteria, in order of importance, include reliability, cost, maneuverability, efficiency, and stealth. Simulation-based design approaches are also under development for advanced torpedoes and submarines but are likely to be more challenging because stealth and other design criteria that are more difficult and costly to achieve are relatively more important in these applications.

In committing to such an effort, ARL had to assume the risk of failure to deliver the product by the imposed deadline at the contractual cost. Risk, therefore, is a major consideration in devoting the necessary time and money to develop integrated design tools. In ARL's case, it had the experience and scientific knowledge to assess these risks and decided that they were worth assuming given the expected benefits, including the immediate goal of meeting the contractual specifications and the longer-term goals of enhanced design capability and the greater likelihood of future contracts arising from improved goodwill with the project sponsors.

| 21 | Multiresolution modeling is defined as the representation of real-world systems at more than one level of resolution in a model with the level of resolution dynamically variable to meet the needs of the situation. |

This case study illustrates that the keys to success of a new design strategy are advanced system requirements, reduced traditional development cycle times, reduced design and development costs, and reduced life-cycle or total ownership costs. These can be achieved by applying the systems design approach early, which increases the number and fidelity of design trade-off analyses.

The development of the UUV offers several lessons for bridging the gap between design and manufacturing. The physics-based models developed at ARL will play an important role in future integrated design efforts, particularly in simulating product performance. The UUV project at ARL also illustrates that tool development is not the result of a targeted research and development program but rather a means to an end. Perhaps this approach provides for more efficient tool development and integration. On the other hand, the lack of targeted research and development funding for tool integration may hinder the development of truly path-breaking integrated design simulation tools.

Another constraint encountered by ARL was computer resources. The computations were performed using networked computer workstations. Supercomputer resources were accessible but less readily available on a real-time basis. Both computing environments, however, limit the accuracy of the models, particularly those that model cavitation and acoustics. As computer speeds increase in the future, modeling these processes at finer resolution will be possible.

Finally, the availability of U.S. citizens to work on these projects is limited and poses some real constraints on system integration development. This last constraint raises a number of training and education issues that may be particularly vexing for policy makers as they seek ways to foster a more efficient design process.

| a | M.J. Pierzga, "ARL Integrated Design Approach Using Computational Fluid Dynamics," and C.R. Zentner and W.M. Moyer, "Unmanned Undersea Vehicle Technology," Applied Research Laboratory Review, Pennsylvania State University, University Park, Pa., pp. 21-22, May, 2001. |

- Testing and resolving no-build conditions early in the design process will reduce or eliminate the costs of maturing a design that cannot be fabricated or assembled late into the design process.

- Perhaps the most critical improvement that can result from the integration of design and manufacturing models is that early design decisions can be made with consideration of the manufacturing ramifications. This integration can have a dramatic impact on the total product cost.
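The multiresolution idea named in the list above (and defined in footnote 21) can be illustrated with a small sketch. It is not taken from the ARL tools; the ellipsoidal hull shape, the function names, and the doubling strategy are all assumptions chosen only to show one model answering the same question at a resolution that varies with the accuracy the caller needs.

```python
import math

def hull_radius(x, length, diameter):
    """Radius at axial position x of an ellipsoidal hull (assumed shape, illustration only)."""
    u = 2.0 * x / length - 1.0              # map [0, length] onto [-1, 1]
    return 0.5 * diameter * math.sqrt(max(0.0, 1.0 - u * u))

def hull_volume(length, diameter, n_slices):
    """Midpoint-rule volume from n_slices thin disks; n_slices sets the resolution."""
    dx = length / n_slices
    total = 0.0
    for i in range(n_slices):
        r = hull_radius((i + 0.5) * dx, length, diameter)
        total += math.pi * r * r * dx
    return total

def hull_volume_adaptive(length, diameter, rel_tol, n_start=4, n_max=1 << 16):
    """Double the resolution until two successive estimates agree to rel_tol."""
    n = n_start
    previous = hull_volume(length, diameter, n)
    while n < n_max:
        n *= 2
        current = hull_volume(length, diameter, n)
        if abs(current - previous) <= rel_tol * abs(current):
            return current, n
        previous = current
    return previous, n

if __name__ == "__main__":
    length, diameter = 3.0, 0.5             # metres, hypothetical vehicle
    exact = math.pi * length * diameter ** 2 / 6.0   # closed form for an ellipsoid
    coarse = hull_volume(length, diameter, 4)
    fine, n_used = hull_volume_adaptive(length, diameter, 1e-6)
    print(f"coarse error: {abs(coarse - exact) / exact:.2%}")
    print(f"fine error:   {abs(fine - exact) / exact:.2e} using {n_used} slices")
```

An early trade-off study might call the coarse form thousands of times, while a final check would ask for a tight tolerance; both go through the same model, which is the consistency benefit the list item points to.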

MATERIALS SCIENCE TOOLS

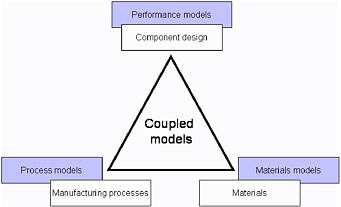

The goal of materials science and engineering is to link the structure and composition of materials with their manufacturing and properties; in other words, to develop models for materials behavior and performance during and after manufacturing. To achieve this, materials engineers use tools that span the full range of length scales, from the electronic to the continuum. In fact, a long chain of successful models punctuates the history of materials science. Some models, such as those based on semiconductor device theory, rely on fundamental physics; others, such as annealing curves, arise from phenomenology; and most, such as phase diagrams, combine theory and observation. Within the past two decades, the subspecialty of computational materials science has provided additional modeling tools to the materials scientist. With applications ranging from empirical (expert systems) to fundamental (ab initio electronic structure calculations), computational materials science enables a more integral link between materials, design, and manufacturing as illustrated in Figure 3-7.

Current Tools

Research Codes

The purview of materials science and engineering is scientifically and technologically vast. Everything is made from materials, and the responsibilities of materials scientists range from the extraction of raw materials through processing and manufacturing to performance and reliability. Because of its broad scope, materials engineering is a decade or so behind other engineering disciplines in developing a core set of computational tools. For example, mechanical engineers are trained as undergraduate students in using finite element modeling (FEM) for heat and mass transfer, and a variety of commercial FEM packages are in wide industrial use. Materials scientists have no comparable computational training or tools. Even in cases where extensive scientific tools are available for prediction of basic materials properties or structures, their connectivity to real-life products and large-scale applications remains highly inadequate.

While computational materials science continues to progress, most of its applications remain research codes, with a few notable exceptions that are discussed below. Research codes are created for a variety of reasons, but their common characteristic is that they are written for a limited, specialized user base. Since users are assumed to be experts in both the scientific and computational aspects of the code, most research codes suffer from poor documentation, lack of a friendly user interface, platform incompatibility, no user support, and lack of extendibility. Furthermore, because research codes are usually written for a single purpose and customer, they may not even be numerically stable or scientifically correct. Of course, there are many examples of research codes that are well supported and responsive to customers, such as Surface Evolver, a code that calculates the wetting and spreading of liquids on surfaces.22 These are often labors of love, supported by a single researcher or group; the danger is that there is no guarantee of continuity of support as funding or personal circumstances change. On the positive side, many research codes are available without cost, and many researchers are delighted to reach new customers for their codes.

As a research code adds capabilities and demonstrates its utility to more users, it may graduate to a more sophisticated, stable, and supported application, either through commercialization23 or through the open-source paradigm.24 While a few materials science codes have made this transition, none pervade the field, particularly within the ranks of the practicing materials designers and engineers.

Materials Science Models

While computational materials models are quite diverse, they can be loosely classified by length scale and scientific content into five categories: atomic-scale models, mesoscale models, continuum models, thermodynamic models, and databases. Within these categories, physics-based models, which utilize scientific theory to predict materials behavior, can be distinguished from empirical models, which rely on experimental observation and prediction of trends to do the same. Of course many models are hybrids, and include both fundamental science and empirical data.

Atomic-Scale Models. Atomic-scale simulations were among the first scientific computer applications. From simple early simulations, which treated atoms as classical hard spheres, these models have evolved into sophisticated tools for predicting a wide range of material behavior. As such, they represent a well-developed area of computational materials science.

Atomic-scale models are divided into two types: ab initio electronic structure calculations and empirical atomistic simulations. Electronic structure models solve simplified versions of the Schrödinger equation to generate the electron density profile in an array of atoms. From this profile, the position and bonding of every atom in the system can be determined. In theory, ab initio simulations provide a complete description of a system: its structure, thermodynamics, and properties for idealized situations and limited size.
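For orientation, the equation referred to above can be written in its standard textbook form. This is not taken from the report; it assumes fixed nuclei, ignores spin, and uses conventional symbols, with v_ext standing for the potential the nuclei exert on each electron and n(r) for the electron density obtained from the wavefunction.

```latex
\[
\hat{H}\,\Psi(\mathbf{r}_1,\dots,\mathbf{r}_N) = E\,\Psi(\mathbf{r}_1,\dots,\mathbf{r}_N),
\qquad
\hat{H} = -\sum_{i=1}^{N}\frac{\hbar^2}{2m_e}\nabla_i^2
          + \sum_{i=1}^{N} v_{\mathrm{ext}}(\mathbf{r}_i)
          + \frac{1}{2}\sum_{i\neq j}\frac{e^2}{4\pi\varepsilon_0\,|\mathbf{r}_i-\mathbf{r}_j|}
\]
\[
n(\mathbf{r}) = N\int \bigl|\Psi(\mathbf{r},\mathbf{r}_2,\dots,\mathbf{r}_N)\bigr|^2\,
                d\mathbf{r}_2\cdots d\mathbf{r}_N
\]
```

The electron-electron term couples all N coordinates, which is why practical codes work with simplified versions rather than the full equation.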

In practice, ab initio simulations are limited in two ways. First, because the many-electron Schrödinger equation cannot be solved in closed form, all ab initio simulations utilize approximations. A major focus of researchers in this area is improving the approximations and quantifying their effects. However, their limitations remain important; for example, ab initio calculations are typically accurate to within 0.1 eV. This resolution limit can mean prediction of incorrect equilibrium crystal structures and errors in calculating melting points of up to 300 K. Second, electronic structure calculations are extremely computationally intensive. Current heroic calculations may simulate 10^5 atoms for a nanosecond. Even with geometrically increasing computer resources, the capability to simulate a mole of atoms in an hour remains a long-term goal.
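A rough back-of-the-envelope calculation shows why a mole of atoms remains a long-term goal even with geometric growth in computing. The sketch below starts from a present-day system size (taken here as 10^5 atoms, reading the figure quoted above as a power of ten) and counts how many doublings of computing capability separate it from Avogadro's number. The 18-month doubling period and the cost exponents are assumptions for illustration, not figures from the report; conventional electronic structure methods are often described as scaling roughly cubically with system size, so both a linear and a cubic case are shown.

```python
import math

AVOGADRO = 6.02214076e23  # atoms per mole (SI defining value)

def doublings_needed(current_atoms, target_atoms, cost_exponent=1.0):
    """Doublings of compute required if cost grows as atoms**cost_exponent."""
    return cost_exponent * math.log2(target_atoms / current_atoms)

def years_needed(current_atoms, target_atoms, doubling_period_years, cost_exponent=1.0):
    """Elapsed years, assuming capability doubles every doubling_period_years."""
    return doublings_needed(current_atoms, target_atoms, cost_exponent) * doubling_period_years

if __name__ == "__main__":
    current = 1e5            # assumed present-day system size, in atoms
    period = 1.5             # assumed doubling period, in years
    for exponent in (1.0, 3.0):
        d = doublings_needed(current, AVOGADRO, exponent)
        y = years_needed(current, AVOGADRO, period, exponent)
        print(f"cost ~ N^{exponent:g}: {d:.0f} doublings, about {y:.0f} years")
```

Even under the optimistic linear assumption the gap is roughly 62 doublings, which at the assumed pace corresponds to the better part of a century; the estimate also ignores the simulated time span, which would add further cost.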

Despite their limitations, electronic structure calculations are valuable tools for elucidating the underlying physics of materials behavior. They have been particularly successful in calculating phase diagrams, crystal structures, solute distribution, and the structure and properties of internal defects. Because they include electronic bonding, ab initio simulations are the only first-principles method for predicting chemical interactions, including alloy chemistry, structure, and surface interactions. A large number of ab initio electronic structure codes have been developed, and several, such as VASP25 and WIEN2k,26 are commercially available and widely utilized. These simulations are still research codes; generating meaningful results requires graduate-level knowledge of both the technique and the particular code. However, both packages can be considered robust and supported tools.

| 24 | An example is the ABINIT code, whose main program allows one to find the total energy, charge density, and electronic structure of systems. More information is available at: http://www.abinit.org/. Accessed April 2004. |

| 25 | G. Kresse, "Vienna Ab-initio Simulation Package," October 12, 1999. Available at: http://cms.mpi.univie.ac.at/vasp. Accessed April 2004. |

| 26 | P. Blaha, K. Schwarz, G. Madsen, D. Kvasnicka, and J. Luitz, "WIEN2k," 2001. Available at: http://www.wien2k.at/. Accessed April 2004. |

FIGURE 3-7 Models for linking design, manufacturing, and materials.